Keep only essential files: sources, headers, build system, and licenses.

+++ /dev/null

-aho-corasick

-==

-

-Aho-Corasick parallel string search, using interleaved arrays.

-

-Mischa Sandberg mischasan@gmail.com

-

-ACISM is an implementation of Aho-Corasick parallel string search,

-using an Interleaved State-transition Matrix.

-It combines the fastest possible Aho-Corasick implementation,

-with the smallest possible data structure (!).

-

-FEATURES

---------

-

-* Fast. No hashing, no tree traversal; just a straight look-up equivalent to

- matrix[state, input-byte] per input character.

-

-* Tiny. On average, the whole data structure (mostly the array) takes about 2-3 bytes per

- input pattern byte. The original set of pattern strings can be reverse-generated from the machine.

-

-* Shareable. The state machine contains no pointers, so it can be compiled once,

- then memory-mapped by many processes.

-

-* Searches byte vectors, not null-terminated strings.

- Suitable for searching machine code as much as searching text.

-

-* DOS-proof. Well, that's an attribute of Aho-Corasick,

- so no real points for that.

-

-* Stream-ready. The state can be saved between calls to search data.

-

-DOCUMENTATION

--------------

-

-The GoogleDocs description is at http://goo.gl/lE6zG

-I originally called it "psearch", but found that name was overused by other authors.

-

-LICENSE

--------

-

-Though I've had strong suggestions to go with BSD license, I'm going with GPL2 until I figure out

-how to keep in touch with people who download and use the code. Hence the "CONTACT ME IF..." line in the license.

-

-GETTING STARTED

----------------

-

-Download the source, type "gmake".

-"gmake install" exports lib/libacism.a, include/acism.h and bin/acism_x.

-"acism_x.c" is a good example of calling acism_create and acism_scan/acism_more.

-

-(If you're interested in the GNUmakefile and rules.mk,

- check my blog posts on non-recursive make, at mischasan.wordpress.com.)

-

-HISTORY

--------

-

-The interleaved-array approach was tried and discarded in the late 70's, because the compile time was O(n^2).

-acism_create beats the problem with a "hint" array that tracks the restart points for searches.

-That, plus discarding the original idea of how to get maximal density, resulted in the tiny-fast win-win.

-

-ACKNOWLEDGEMENTS

-----------------

-

-I'd like to thank Mike Shannon, who wanted to see a machine built to make best use of L1/L2 cache.

-The change to do that doubled performance on hardware with a much larger cache than the matrix.

-Go figure.

+++ /dev/null

-<p align="center"><img src="scripts/data/logo/logo_1.svg"></p>

-<b>

-<table>

- <tr>

- <td>

- master branch

- </td>

- <td>

- Windows <a href="https://ci.appveyor.com/project/onqtam/doctest/branch/master"><img src="https://ci.appveyor.com/api/projects/status/j89qxtahyw1dp4gd/branch/master?svg=true"></a>

- </td>

- <td>

- All <a href="https://github.com/onqtam/doctest/actions?query=branch%3Amaster"><img src="https://github.com/onqtam/doctest/workflows/CI/badge.svg?branch=master"></a>

- </td>

- <td>

- <a href="https://coveralls.io/github/onqtam/doctest?branch=master"><img src="https://coveralls.io/repos/github/onqtam/doctest/badge.svg?branch=master"></a>

- </td>

- <!--

- <td>

- <a href="https://scan.coverity.com/projects/onqtam-doctest"><img src="https://scan.coverity.com/projects/7865/badge.svg"></a>

- </td>

- -->

- </tr>

- <tr>

- <td>

- dev branch

- </td>

- <td>

- Windows <a href="https://ci.appveyor.com/project/onqtam/doctest/branch/dev"><img src="https://ci.appveyor.com/api/projects/status/j89qxtahyw1dp4gd/branch/dev?svg=true"></a>

- </td>

- <td>

- All <a href="https://github.com/onqtam/doctest/actions?query=branch%3Adev"><img src="https://github.com/onqtam/doctest/workflows/CI/badge.svg?branch=dev"></a>

- </td>

- <td>

- <a href="https://coveralls.io/github/onqtam/doctest?branch=dev"><img src="https://coveralls.io/repos/github/onqtam/doctest/badge.svg?branch=dev"></a>

- </td>

- <!--

- <td>

- </td>

- -->

- </tr>

-</table>

-</b>

-

-**doctest** is a new C++ testing framework but is by far the fastest both in compile times (by [**orders of magnitude**](doc/markdown/benchmarks.md)) and runtime compared to other feature-rich alternatives. It brings the ability of compiled languages such as [**D**](https://dlang.org/spec/unittest.html) / [**Rust**](https://doc.rust-lang.org/book/second-edition/ch11-00-testing.html) / [**Nim**](https://nim-lang.org/docs/unittest.html) to have tests written directly in the production code thanks to a fast, transparent and flexible test runner with a clean interface.

-

-[](https://en.wikipedia.org/wiki/C%2B%2B#Standardization)

-[](https://opensource.org/licenses/MIT)

-[](https://github.com/onqtam/doctest/releases)

-[](https://raw.githubusercontent.com/onqtam/doctest/master/doctest/doctest.h)

-[](https://bestpractices.coreinfrastructure.org/projects/503)

-[](https://lgtm.com/projects/g/onqtam/doctest/context:cpp)

-[](https://gitter.im/onqtam/doctest?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge&utm_content=badge)

-[](https://wandbox.org/permlink/nJIibfbivG7BG7r1)

-<!--

-[](https://isocpp.org/)

-[](https://github.com/onqtam/doctest/blob/master/doc/markdown/readme.md#reference)

--->

-

-[<img src="https://cloud.githubusercontent.com/assets/8225057/5990484/70413560-a9ab-11e4-8942-1a63607c0b00.png" align="right">](http://www.patreon.com/onqtam)

-

-The framework is and will stay free but needs your support to sustain its development. There are lots of <a href="doc/markdown/roadmap.md"><b>new features</b></a> and maintenance to do. If you work for a company using **doctest** or have the means to do so, please consider financial support. Monthly donations via Patreon and one-offs via PayPal.

-

-[<img src="https://www.paypalobjects.com/en_US/i/btn/btn_donate_LG.gif" align="right">](https://www.paypal.me/onqtam/10)

-

-A complete example with a self-registering test that compiles to an executable looks like this:

-

-

-

-There are many C++ testing frameworks - [Catch](https://github.com/catchorg/Catch2), [Boost.Test](http://www.boost.org/doc/libs/1_64_0/libs/test/doc/html/index.html), [UnitTest++](https://github.com/unittest-cpp/unittest-cpp), [cpputest](https://github.com/cpputest/cpputest), [googletest](https://github.com/google/googletest) and many [other](https://en.wikipedia.org/wiki/List_of_unit_testing_frameworks#C.2B.2B).

-

-The **key** differences between it and other testing frameworks are that it is light and unintrusive:

-- Ultra light on compile times both in terms of [**including the header**](doc/markdown/benchmarks.md#cost-of-including-the-header) and writing [**thousands of asserts**](doc/markdown/benchmarks.md#cost-of-an-assertion-macro)

-- Doesn't produce any warnings even on the [**most aggressive**](scripts/cmake/common.cmake#L84) warning levels for **MSVC**/**GCC**/**Clang**

-- Offers a way to remove **everything** testing-related from the binary with the [**```DOCTEST_CONFIG_DISABLE```**](doc/markdown/configuration.md#doctest_config_disable) identifier

-- [**thread-safe**](doc/markdown/faq.md#is-doctest-thread-aware) - asserts (and logging) can be used from multiple threads spawned from a single test case - [**example**](examples/all_features/concurrency.cpp)

-- asserts can be used [**outside of a testing context**](doc/markdown/assertions.md#using-asserts-out-of-a-testing-context) - as a general purpose assert library - [**example**](examples/all_features/asserts_used_outside_of_tests.cpp)

-- Doesn't pollute the global namespace (everything is in namespace ```doctest```) and doesn't drag **any** headers with it

-- Very [**portable**](doc/markdown/features.md#extremely-portable) C++11 (use tag [**1.2.9**](https://github.com/onqtam/doctest/tree/1.2.9) for C++98) with over 180 different CI builds (static analysis, sanitizers...)

-- binaries (exe/dll) can use the test runner of another binary - so tests end up in a single registry - [**example**](examples/executable_dll_and_plugin/)

-

-

-

-This allows the framework to be used in more ways than any other - tests can be written directly in the production code!

-

-*Tests can be considered a form of documentation and should be able to reside near the production code which they test.*

-

-- This makes the barrier for writing tests **much lower** - you don't have to: **1)** make a separate source file **2)** include a bunch of stuff in it **3)** add it to the build system and **4)** add it to source control - You can just write the tests for a class or a piece of functionality at the bottom of its source file - or even header file!

-- Tests in the production code can be thought of as documentation or up-to-date comments - showing the use of APIs

-- Testing internals that are not exposed through the public API and headers is no longer a mind-bending exercise

-- [**Test-driven development**](https://en.wikipedia.org/wiki/Test-driven_development) in C++ has never been easier!

-

-The framework can be used like any other if you don't want/need to mix production code and tests - check out the [**features**](doc/markdown/features.md).

-

-**doctest** is modeled after [**Catch**](https://github.com/catchorg/Catch2) and some parts of the code have been taken directly - check out [**the differences**](doc/markdown/faq.md#how-is-doctest-different-from-catch).

-

-[This table](https://github.com/martinmoene/catch-lest-other-comparison) compares **doctest** / [**Catch**](https://github.com/catchorg/Catch2) / [**lest**](https://github.com/martinmoene/lest) which are all very similar.

-

-Checkout the [**CppCon 2017 talk**](https://cppcon2017.sched.com/event/BgsI/mix-tests-and-production-code-with-doctest-implementing-and-using-the-fastest-modern-c-testing-framework) on [**YouTube**](https://www.youtube.com/watch?v=eH1CxEC29l8) to get a better understanding of how the framework works and read about how to use it in [**the JetBrains article**](https://blog.jetbrains.com/rscpp/better-ways-testing-with-doctest/) - highlighting the unique aspects of the framework! On a short description on how to use the framework along production code you could refer to [**this GitHub issue**](https://github.com/onqtam/doctest/issues/252). There is also an [**older article**](https://accu.org/var/uploads/journals/Overload137.pdf) in the february edition of ACCU Overload 2017.

-

-[](https://www.youtube.com/watch?v=eH1CxEC29l8)

-

-Documentation

--------------

-

-Project:

-

-- [Features and design goals](doc/markdown/features.md) - the complete list of features

-- [Roadmap](doc/markdown/roadmap.md) - upcoming features

-- [Benchmarks](doc/markdown/benchmarks.md) - compile-time and runtime supremacy

-- [Contributing](CONTRIBUTING.md) - how to make a proper pull request

-- [Changelog](CHANGELOG.md) - generated changelog based on closed issues/PRs

-

-Usage:

-

-- [Tutorial](doc/markdown/tutorial.md) - make sure you have read it before the other parts of the documentation

-- [Assertion macros](doc/markdown/assertions.md)

-- [Test cases, subcases and test fixtures](doc/markdown/testcases.md)

-- [Parameterized test cases](doc/markdown/parameterized-tests.md)

-- [Command line](doc/markdown/commandline.md)

-- [Logging macros](doc/markdown/logging.md)

-- [```main()``` entry point](doc/markdown/main.md)

-- [Configuration](doc/markdown/configuration.md)

-- [String conversions](doc/markdown/stringification.md)

-- [Reporters](doc/markdown/reporters.md)

-- [Extensions](doc/markdown/extensions.md)

-- [FAQ](doc/markdown/faq.md)

-- [Build systems](doc/markdown/build-systems.md)

-- [Examples](examples)

-

-Contributing

-------------

-

-[<img src="https://cloud.githubusercontent.com/assets/8225057/5990484/70413560-a9ab-11e4-8942-1a63607c0b00.png" align="right">](http://www.patreon.com/onqtam)

-

-Support the development of the project with donations! There is a list of planned features which are all important and big - see the [**roadmap**](doc/markdown/roadmap.md). I took a break from working in the industry to make open source software so every cent is a big deal.

-

-[<img src="https://www.paypalobjects.com/en_US/i/btn/btn_donate_LG.gif" align="right">](https://www.paypal.me/onqtam/10)

-

-If you work for a company using **doctest** or have the means to do so, please consider financial support.

-

-Contributions in the form of issues and pull requests are welcome as well - check out the [**Contributing**](CONTRIBUTING.md) page.

-

-Stargazers over time

-------------

-

-[](https://starcharts.herokuapp.com/onqtam/doctest)

-

-Logo

-------------

-

-The [logo](scripts/data/logo) is licensed under a Creative Commons Attribution 4.0 International License. Copyright © 2019 [area55git](https://github.com/area55git) [](https://creativecommons.org/licenses/by/4.0/)

-

-<p align="center"><img src="scripts/data/logo/icon_2.svg"></p>

+++ /dev/null

-2.4.11

\ No newline at end of file

+++ /dev/null

-FEATURES

-1) Support new http-protocol (/checkv2)

-2) Return action, symbols, symbol options, messages, scan time

-

-INSTALL

-

-1) Get cJSON.c, cJSON.h, put to dlfunc src dir (https://github.com/DaveGamble/cJSON)

-2) Compile dlfunc library:

- cc rspamd.c -fPIC -fpic -shared -I/root/rpmbuild/BUILD/exim-4.89/build-Linux-x86_64/ -o exim-rspamd-http-dlfunc.so

-3) See exim-example.txt for exim configure

+++ /dev/null

-acl_smtp_data = acl_check_data

-

-.....

-

-acl_check_data:

-

-.....

-

-# RSPAMD: START

- warn

- !authenticated = *

- add_header = X-Spam-Checker-Version: Rspamd

- add_header = :at_start:Authentication-Results: ip=$sender_host_address:$sender_host_port, host=$sender_host_name, helo=$sender_helo_name, mailfrom=$sender_address

- warn

- #spam = nobody:true

- #set acl_m0_rspamd = $spam_report

- set acl_m0_rspamd = ${dlfunc{/usr/local/libexec/exim/exim-rspamd-http-dlfunc.so}{rspamd}{/var/run/rspamd/rspamd.sock}{defer_ok}}

- accept

- authenticated = *

- warn

- condition = ${if eq{$acl_m0_rspamd}{}}

- logwrite = RSPAMD check failed

- add_header = X-Spam-Info: Check failed

- warn

- condition = ${if match{$acl_m0_rspamd}{\N^rspamd dlfunc:\s*\N}{yes}{no}}

- logwrite = RSPAMD check defer: ${sg{$acl_m0_rspamd}{\N^rspamd dlfunc:\s*\N}{}}

- add_header = X-Spam-Info: Check deffered

-

- warn

- remove_header = X-Spam-Checker-Version:X-Spam-Status:X-Spam-Info:X-Spam-Result

- set acl_m1 = No

- warn

- condition = ${if !eq{$acl_m0_rspamd}{}}

- set acl_m1_yesno = ${if match{$acl_m0_rspamd}{\NAction: (.+?)\n\N}{$1}{}}

- set acl_m2_status = ${if eq{$acl_m1_yesno}{reject}{REJECT}{\

- ${if eq{$acl_m1_yesno}{add header}{PROBABLY}{\

- ${if eq{$acl_m1_yesno}{rewrite subject}{PROBABLY}{\

- ${if eq{$acl_m1_yesno}{soft reject}{SOFT}{\

- ${if eq{$acl_m1_yesno}{greylist}{GREYLIST}{NO}}\

- }}\

- }}\

- }}\

- }}

- set acl_m1_yesno = ${if eq{$acl_m1_yesno}{}{unknown}{\

- ${if eq{$acl_m1_yesno}{reject}{Yes}{\

- ${if eq{$acl_m1_yesno}{add header}{Yes}{\

- ${if eq{$acl_m1_yesno}{rewrite subject}{Yes}{\

- ${if eq{$acl_m1_yesno}{soft reject}{Probably}{\

- ${if eq{$acl_m1_yesno}{greylist}{Probably}{No}}\

- }}\

- }}\

- }}\

- }}\

- }}

- #logwrite = RSPAMD: status: $acl_m2_status

- #logwrite = RSPAMD DEBUG: $acl_m0_rspamd

- set acl_m0_rspamd = ${sg{$acl_m0_rspamd}{ Action:.+\n}{}}

- warn

- condition = ${if !eq{$acl_m0_rspamd}{}}

- logwrite = RSPAMD: $acl_m2_status, $acl_m0_rspamd

- add_header = X-Spam-Result: $acl_m0_rspamd

- add_header = X-Spam-Status: $acl_m1_yesno

- defer

- condition = ${if eq{$acl_m2_status}{GREYLIST}}

- log_message = Rspamd $acl_m2_status

- message = Try again later. Message greylisted

- defer

- condition = ${if eq{$acl_m2_status}{SOFT}}

- log_message = Rspamd $acl_m2_status

- message = Try again later. Message previously greylisted

- deny

- condition = ${if eq{$acl_m2_status}{REJECT}}

- log_message = Rspamd $acl_m2_status

- message = This message detected as SPAM and rejected

-# RSPAMD: END

+++ /dev/null

-<img src="https://user-images.githubusercontent.com/576385/156254208-f5b743a9-88cf-439d-b0c0-923d53e8d551.png" alt="{fmt}" width="25%"/>

-

-[](https://github.com/fmtlib/fmt/actions?query=workflow%3Alinux)

-[](https://github.com/fmtlib/fmt/actions?query=workflow%3Amacos)

-[](https://github.com/fmtlib/fmt/actions?query=workflow%3Awindows)

-[](https://bugs.chromium.org/p/oss-fuzz/issues/list?\%0Acolspec=ID%20Type%20Component%20Status%20Proj%20Reported%20Owner%20\%0ASummary&q=proj%3Dfmt&can=1)

-[](https://stackoverflow.com/questions/tagged/fmt)

-[](https://securityscorecards.dev/viewer/?uri=github.com/fmtlib/fmt)

-

-**{fmt}** is an open-source formatting library providing a fast and safe

-alternative to C stdio and C++ iostreams.

-

-If you like this project, please consider donating to one of the funds

-that help victims of the war in Ukraine: <https://www.stopputin.net/>.

-

-[Documentation](https://fmt.dev)

-

-[Cheat Sheets](https://hackingcpp.com/cpp/libs/fmt.html)

-

-Q&A: ask questions on [StackOverflow with the tag

-fmt](https://stackoverflow.com/questions/tagged/fmt).

-

-Try {fmt} in [Compiler Explorer](https://godbolt.org/z/8Mx1EW73v).

-

-# Features

-

-- Simple [format API](https://fmt.dev/latest/api.html) with positional

- arguments for localization

-- Implementation of [C++20

- std::format](https://en.cppreference.com/w/cpp/utility/format) and

- [C++23 std::print](https://en.cppreference.com/w/cpp/io/print)

-- [Format string syntax](https://fmt.dev/latest/syntax.html) similar

- to Python\'s

- [format](https://docs.python.org/3/library/stdtypes.html#str.format)

-- Fast IEEE 754 floating-point formatter with correct rounding,

- shortness and round-trip guarantees using the

- [Dragonbox](https://github.com/jk-jeon/dragonbox) algorithm

-- Portable Unicode support

-- Safe [printf

- implementation](https://fmt.dev/latest/api.html#printf-formatting)

- including the POSIX extension for positional arguments

-- Extensibility: [support for user-defined

- types](https://fmt.dev/latest/api.html#formatting-user-defined-types)

-- High performance: faster than common standard library

- implementations of `(s)printf`, iostreams, `to_string` and

- `to_chars`, see [Speed tests](#speed-tests) and [Converting a

- hundred million integers to strings per

- second](http://www.zverovich.net/2020/06/13/fast-int-to-string-revisited.html)

-- Small code size both in terms of source code with the minimum

- configuration consisting of just three files, `core.h`, `format.h`

- and `format-inl.h`, and compiled code; see [Compile time and code

- bloat](#compile-time-and-code-bloat)

-- Reliability: the library has an extensive set of

- [tests](https://github.com/fmtlib/fmt/tree/master/test) and is

- [continuously fuzzed](https://bugs.chromium.org/p/oss-fuzz/issues/list?colspec=ID%20Type%20Component%20Status%20Proj%20Reported%20Owner%20Summary&q=proj%3Dfmt&can=1)

-- Safety: the library is fully type-safe, errors in format strings can

- be reported at compile time, automatic memory management prevents

- buffer overflow errors

-- Ease of use: small self-contained code base, no external

- dependencies, permissive MIT

- [license](https://github.com/fmtlib/fmt/blob/master/LICENSE.rst)

-- [Portability](https://fmt.dev/latest/index.html#portability) with

- consistent output across platforms and support for older compilers

-- Clean warning-free codebase even on high warning levels such as

- `-Wall -Wextra -pedantic`

-- Locale independence by default

-- Optional header-only configuration enabled with the

- `FMT_HEADER_ONLY` macro

-

-See the [documentation](https://fmt.dev) for more details.

-

-# Examples

-

-**Print to stdout** ([run](https://godbolt.org/z/Tevcjh))

-

-``` c++

-#include <fmt/core.h>

-

-int main() {

- fmt::print("Hello, world!\n");

-}

-```

-

-**Format a string** ([run](https://godbolt.org/z/oK8h33))

-

-``` c++

-std::string s = fmt::format("The answer is {}.", 42);

-// s == "The answer is 42."

-```

-

-**Format a string using positional arguments**

-([run](https://godbolt.org/z/Yn7Txe))

-

-``` c++

-std::string s = fmt::format("I'd rather be {1} than {0}.", "right", "happy");

-// s == "I'd rather be happy than right."

-```

-

-**Print dates and times** ([run](https://godbolt.org/z/c31ExdY3W))

-

-``` c++

-#include <fmt/chrono.h>

-

-int main() {

- auto now = std::chrono::system_clock::now();

- fmt::print("Date and time: {}\n", now);

- fmt::print("Time: {:%H:%M}\n", now);

-}

-```

-

-Output:

-

- Date and time: 2023-12-26 19:10:31.557195597

- Time: 19:10

-

-**Print a container** ([run](https://godbolt.org/z/MxM1YqjE7))

-

-``` c++

-#include <vector>

-#include <fmt/ranges.h>

-

-int main() {

- std::vector<int> v = {1, 2, 3};

- fmt::print("{}\n", v);

-}

-```

-

-Output:

-

- [1, 2, 3]

-

-**Check a format string at compile time**

-

-``` c++

-std::string s = fmt::format("{:d}", "I am not a number");

-```

-

-This gives a compile-time error in C++20 because `d` is an invalid

-format specifier for a string.

-

-**Write a file from a single thread**

-

-``` c++

-#include <fmt/os.h>

-

-int main() {

- auto out = fmt::output_file("guide.txt");

- out.print("Don't {}", "Panic");

-}

-```

-

-This can be [5 to 9 times faster than

-fprintf](http://www.zverovich.net/2020/08/04/optimal-file-buffer-size.html).

-

-**Print with colors and text styles**

-

-``` c++

-#include <fmt/color.h>

-

-int main() {

- fmt::print(fg(fmt::color::crimson) | fmt::emphasis::bold,

- "Hello, {}!\n", "world");

- fmt::print(fg(fmt::color::floral_white) | bg(fmt::color::slate_gray) |

- fmt::emphasis::underline, "Olá, {}!\n", "Mundo");

- fmt::print(fg(fmt::color::steel_blue) | fmt::emphasis::italic,

- "你好{}!\n", "世界");

-}

-```

-

-Output on a modern terminal with Unicode support:

-

-

-

-# Benchmarks

-

-## Speed tests

-

-| Library | Method | Run Time, s |

-|-------------------|---------------|-------------|

-| libc | printf | 0.91 |

-| libc++ | std::ostream | 2.49 |

-| {fmt} 9.1 | fmt::print | 0.74 |

-| Boost Format 1.80 | boost::format | 6.26 |

-| Folly Format | folly::format | 1.87 |

-

-{fmt} is the fastest of the benchmarked methods, \~20% faster than

-`printf`.

-

-The above results were generated by building `tinyformat_test.cpp` on

-macOS 12.6.1 with `clang++ -O3 -DNDEBUG -DSPEED_TEST -DHAVE_FORMAT`, and

-taking the best of three runs. In the test, the format string

-`"%0.10f:%04d:%+g:%s:%p:%c:%%\n"` or equivalent is filled 2,000,000

-times with output sent to `/dev/null`; for further details refer to the

-[source](https://github.com/fmtlib/format-benchmark/blob/master/src/tinyformat-test.cc).

-

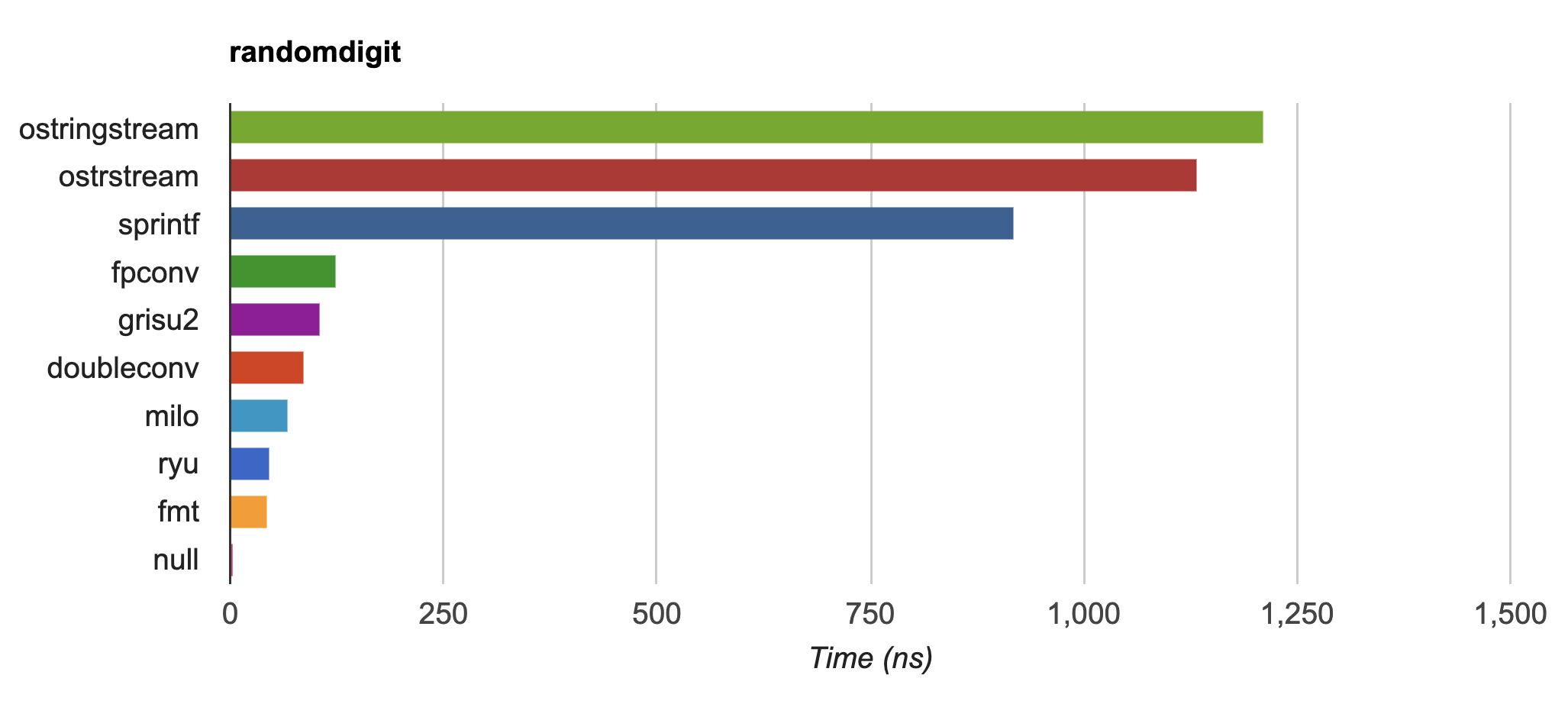

-{fmt} is up to 20-30x faster than `std::ostringstream` and `sprintf` on

-IEEE754 `float` and `double` formatting

-([dtoa-benchmark](https://github.com/fmtlib/dtoa-benchmark)) and faster

-than [double-conversion](https://github.com/google/double-conversion)

-and [ryu](https://github.com/ulfjack/ryu):

-

-[](https://fmt.dev/unknown_mac64_clang12.0.html)

-

-## Compile time and code bloat

-

-The script [bloat-test.py][test] from [format-benchmark][bench] tests compile

-time and code bloat for nontrivial projects. It generates 100 translation units

-and uses `printf()` or its alternative five times in each to simulate a

-medium-sized project. The resulting executable size and compile time (Apple

-clang version 15.0.0 (clang-1500.1.0.2.5), macOS Sonoma, best of three) is shown

-in the following tables.

-

-[test]: https://github.com/fmtlib/format-benchmark/blob/master/bloat-test.py

-[bench]: https://github.com/fmtlib/format-benchmark

-

-**Optimized build (-O3)**

-

-| Method | Compile Time, s | Executable size, KiB | Stripped size, KiB |

-|---------------|-----------------|----------------------|--------------------|

-| printf | 1.6 | 54 | 50 |

-| IOStreams | 25.9 | 98 | 84 |

-| fmt 83652df | 4.8 | 54 | 50 |

-| tinyformat | 29.1 | 161 | 136 |

-| Boost Format | 55.0 | 530 | 317 |

-

-{fmt} is fast to compile and is comparable to `printf` in terms of per-call

-binary size (within a rounding error on this system).

-

-**Non-optimized build**

-

-| Method | Compile Time, s | Executable size, KiB | Stripped size, KiB |

-|---------------|-----------------|----------------------|--------------------|

-| printf | 1.4 | 54 | 50 |

-| IOStreams | 23.4 | 92 | 68 |

-| {fmt} 83652df | 4.4 | 89 | 85 |

-| tinyformat | 24.5 | 204 | 161 |

-| Boost Format | 36.4 | 831 | 462 |

-

-`libc`, `lib(std)c++`, and `libfmt` are all linked as shared libraries

-to compare formatting function overhead only. Boost Format is a

-header-only library so it doesn\'t provide any linkage options.

-

-## Running the tests

-

-Please refer to [Building the

-library](https://fmt.dev/latest/usage.html#building-the-library) for

-instructions on how to build the library and run the unit tests.

-

-Benchmarks reside in a separate repository,

-[format-benchmarks](https://github.com/fmtlib/format-benchmark), so to

-run the benchmarks you first need to clone this repository and generate

-Makefiles with CMake:

-

- $ git clone --recursive https://github.com/fmtlib/format-benchmark.git

- $ cd format-benchmark

- $ cmake .

-

-Then you can run the speed test:

-

- $ make speed-test

-

-or the bloat test:

-

- $ make bloat-test

-

-# Migrating code

-

-[clang-tidy](https://clang.llvm.org/extra/clang-tidy/) v18 provides the

-[modernize-use-std-print](https://clang.llvm.org/extra/clang-tidy/checks/modernize/use-std-print.html)

-check that is capable of converting occurrences of `printf` and

-`fprintf` to `fmt::print` if configured to do so. (By default it

-converts to `std::print`.)

-

-# Notable projects using this library

-

-- [0 A.D.](https://play0ad.com/): a free, open-source, cross-platform

- real-time strategy game

-- [AMPL/MP](https://github.com/ampl/mp): an open-source library for

- mathematical programming

-- [Apple's FoundationDB](https://github.com/apple/foundationdb): an open-source,

- distributed, transactional key-value store

-- [Aseprite](https://github.com/aseprite/aseprite): animated sprite

- editor & pixel art tool

-- [AvioBook](https://www.aviobook.aero/en): a comprehensive aircraft

- operations suite

-- [Blizzard Battle.net](https://battle.net/): an online gaming

- platform

-- [Celestia](https://celestia.space/): real-time 3D visualization of

- space

-- [Ceph](https://ceph.com/): a scalable distributed storage system

-- [ccache](https://ccache.dev/): a compiler cache

-- [ClickHouse](https://github.com/ClickHouse/ClickHouse): an

- analytical database management system

-- [Contour](https://github.com/contour-terminal/contour/): a modern

- terminal emulator

-- [CUAUV](https://cuauv.org/): Cornell University\'s autonomous

- underwater vehicle

-- [Drake](https://drake.mit.edu/): a planning, control, and analysis

- toolbox for nonlinear dynamical systems (MIT)

-- [Envoy](https://lyft.github.io/envoy/): C++ L7 proxy and

- communication bus (Lyft)

-- [FiveM](https://fivem.net/): a modification framework for GTA V

-- [fmtlog](https://github.com/MengRao/fmtlog): a performant

- fmtlib-style logging library with latency in nanoseconds

-- [Folly](https://github.com/facebook/folly): Facebook open-source

- library

-- [GemRB](https://gemrb.org/): a portable open-source implementation

- of Bioware's Infinity Engine

-- [Grand Mountain

- Adventure](https://store.steampowered.com/app/1247360/Grand_Mountain_Adventure/):

- a beautiful open-world ski & snowboarding game

-- [HarpyWar/pvpgn](https://github.com/pvpgn/pvpgn-server): Player vs

- Player Gaming Network with tweaks

-- [KBEngine](https://github.com/kbengine/kbengine): an open-source

- MMOG server engine

-- [Keypirinha](https://keypirinha.com/): a semantic launcher for

- Windows

-- [Kodi](https://kodi.tv/) (formerly xbmc): home theater software

-- [Knuth](https://kth.cash/): high-performance Bitcoin full-node

-- [libunicode](https://github.com/contour-terminal/libunicode/): a

- modern C++17 Unicode library

-- [MariaDB](https://mariadb.org/): relational database management

- system

-- [Microsoft Verona](https://github.com/microsoft/verona): research

- programming language for concurrent ownership

-- [MongoDB](https://mongodb.com/): distributed document database

-- [MongoDB Smasher](https://github.com/duckie/mongo_smasher): a small

- tool to generate randomized datasets

-- [OpenSpace](https://openspaceproject.com/): an open-source

- astrovisualization framework

-- [PenUltima Online (POL)](https://www.polserver.com/): an MMO server,

- compatible with most Ultima Online clients

-- [PyTorch](https://github.com/pytorch/pytorch): an open-source

- machine learning library

-- [quasardb](https://www.quasardb.net/): a distributed,

- high-performance, associative database

-- [Quill](https://github.com/odygrd/quill): asynchronous low-latency

- logging library

-- [QKW](https://github.com/ravijanjam/qkw): generalizing aliasing to

- simplify navigation, and execute complex multi-line terminal

- command sequences

-- [redis-cerberus](https://github.com/HunanTV/redis-cerberus): a Redis

- cluster proxy

-- [redpanda](https://vectorized.io/redpanda): a 10x faster Kafka®

- replacement for mission-critical systems written in C++

-- [rpclib](http://rpclib.net/): a modern C++ msgpack-RPC server and

- client library

-- [Salesforce Analytics

- Cloud](https://www.salesforce.com/analytics-cloud/overview/):

- business intelligence software

-- [Scylla](https://www.scylladb.com/): a Cassandra-compatible NoSQL

- data store that can handle 1 million transactions per second on a

- single server

-- [Seastar](http://www.seastar-project.org/): an advanced, open-source

- C++ framework for high-performance server applications on modern

- hardware

-- [spdlog](https://github.com/gabime/spdlog): super fast C++ logging

- library

-- [Stellar](https://www.stellar.org/): financial platform

-- [Touch Surgery](https://www.touchsurgery.com/): surgery simulator

-- [TrinityCore](https://github.com/TrinityCore/TrinityCore):

- open-source MMORPG framework

-- [🐙 userver framework](https://userver.tech/): open-source

- asynchronous framework with a rich set of abstractions and database

- drivers

-- [Windows Terminal](https://github.com/microsoft/terminal): the new

- Windows terminal

-

-[More\...](https://github.com/search?q=fmtlib&type=Code)

-

-If you are aware of other projects using this library, please let me

-know by [email](mailto:victor.zverovich@gmail.com) or by submitting an

-[issue](https://github.com/fmtlib/fmt/issues).

-

-# Motivation

-

-So why yet another formatting library?

-

-There are plenty of methods for doing this task, from standard ones like

-the printf family of function and iostreams to Boost Format and

-FastFormat libraries. The reason for creating a new library is that

-every existing solution that I found either had serious issues or

-didn\'t provide all the features I needed.

-

-## printf

-

-The good thing about `printf` is that it is pretty fast and readily

-available being a part of the C standard library. The main drawback is

-that it doesn\'t support user-defined types. `printf` also has safety

-issues although they are somewhat mitigated with [\_\_attribute\_\_

-((format (printf,

-\...))](https://gcc.gnu.org/onlinedocs/gcc/Function-Attributes.html) in

-GCC. There is a POSIX extension that adds positional arguments required

-for

-[i18n](https://en.wikipedia.org/wiki/Internationalization_and_localization)

-to `printf` but it is not a part of C99 and may not be available on some

-platforms.

-

-## iostreams

-

-The main issue with iostreams is best illustrated with an example:

-

-``` c++

-std::cout << std::setprecision(2) << std::fixed << 1.23456 << "\n";

-```

-

-which is a lot of typing compared to printf:

-

-``` c++

-printf("%.2f\n", 1.23456);

-```

-

-Matthew Wilson, the author of FastFormat, called this \"chevron hell\".

-iostreams don\'t support positional arguments by design.

-

-The good part is that iostreams support user-defined types and are safe

-although error handling is awkward.

-

-## Boost Format

-

-This is a very powerful library that supports both `printf`-like format

-strings and positional arguments. Its main drawback is performance.

-According to various benchmarks, it is much slower than other methods

-considered here. Boost Format also has excessive build times and severe

-code bloat issues (see [Benchmarks](#benchmarks)).

-

-## FastFormat

-

-This is an interesting library that is fast, safe and has positional

-arguments. However, it has significant limitations, citing its author:

-

-> Three features that have no hope of being accommodated within the

-> current design are:

->

-> - Leading zeros (or any other non-space padding)

-> - Octal/hexadecimal encoding

-> - Runtime width/alignment specification

-

-It is also quite big and has a heavy dependency, on STLSoft, which might be

-too restrictive for use in some projects.

-

-## Boost Spirit.Karma

-

-This is not a formatting library but I decided to include it here for

-completeness. As iostreams, it suffers from the problem of mixing

-verbatim text with arguments. The library is pretty fast, but slower on

-integer formatting than `fmt::format_to` with format string compilation

-on Karma\'s own benchmark, see [Converting a hundred million integers to

-strings per

-second](http://www.zverovich.net/2020/06/13/fast-int-to-string-revisited.html).

-

-# License

-

-{fmt} is distributed under the MIT

-[license](https://github.com/fmtlib/fmt/blob/master/LICENSE).

-

-# Documentation License

-

-The [Format String Syntax](https://fmt.dev/latest/syntax.html) section

-in the documentation is based on the one from Python [string module

-documentation](https://docs.python.org/3/library/string.html#module-string).

-For this reason, the documentation is distributed under the Python

-Software Foundation license available in

-[doc/python-license.txt](https://raw.github.com/fmtlib/fmt/master/doc/python-license.txt).

-It only applies if you distribute the documentation of {fmt}.

-

-# Maintainers

-

-The {fmt} library is maintained by Victor Zverovich

-([vitaut](https://github.com/vitaut)) with contributions from many other

-people. See

-[Contributors](https://github.com/fmtlib/fmt/graphs/contributors) and

-[Releases](https://github.com/fmtlib/fmt/releases) for some of the

-names. Let us know if your contribution is not listed or mentioned

-incorrectly and we\'ll make it right.

-

-# Security Policy

-

-To report a security issue, please disclose it at [security

-advisory](https://github.com/fmtlib/fmt/security/advisories/new).

-

-This project is maintained by a team of volunteers on a

-reasonable-effort basis. As such, please give us at least *90* days to

-work on a fix before public exposure.

+++ /dev/null

-serge-sans-paille <sguelton@quarkslab.com>

-Jérôme Dumesnil <jerome.dumesnil@gmail.com>

-Chris Beck <chbeck@tesla.com>

+++ /dev/null

-[](https://travis-ci.org/redis/hiredis)

-

-# HIREDIS

-

-Hiredis is a minimalistic C client library for the [Redis](http://redis.io/) database.

-

-It is minimalistic because it just adds minimal support for the protocol, but

-at the same time it uses a high level printf-alike API in order to make it

-much higher level than otherwise suggested by its minimal code base and the

-lack of explicit bindings for every Redis command.

-

-Apart from supporting sending commands and receiving replies, it comes with

-a reply parser that is decoupled from the I/O layer. It

-is a stream parser designed for easy reusability, which can for instance be used

-in higher level language bindings for efficient reply parsing.

-

-Hiredis only supports the binary-safe Redis protocol, so you can use it with any

-Redis version >= 1.2.0.

-

-The library comes with multiple APIs. There is the

-*synchronous API*, the *asynchronous API* and the *reply parsing API*.

-

-## UPGRADING

-

-Version 0.9.0 is a major overhaul of hiredis in every aspect. However, upgrading existing

-code using hiredis should not be a big pain. The key thing to keep in mind when

-upgrading is that hiredis >= 0.9.0 uses a `redisContext*` to keep state, in contrast to

-the stateless 0.0.1 that only has a file descriptor to work with.

-

-## Synchronous API

-

-To consume the synchronous API, there are only a few function calls that need to be introduced:

-

-```c

-redisContext *redisConnect(const char *ip, int port);

-void *redisCommand(redisContext *c, const char *format, ...);

-void freeReplyObject(void *reply);

-```

-

-### Connecting

-

-The function `redisConnect` is used to create a so-called `redisContext`. The

-context is where Hiredis holds state for a connection. The `redisContext`

-struct has an integer `err` field that is non-zero when the connection is in

-an error state. The field `errstr` will contain a string with a description of

-the error. More information on errors can be found in the **Errors** section.

-After trying to connect to Redis using `redisConnect` you should

-check the `err` field to see if establishing the connection was successful:

-```c

-redisContext *c = redisConnect("127.0.0.1", 6379);

-if (c != NULL && c->err) {

- printf("Error: %s\n", c->errstr);

- // handle error

-}

-```

-

-### Sending commands

-

-There are several ways to issue commands to Redis. The first that will be introduced is

-`redisCommand`. This function takes a format similar to printf. In the simplest form,

-it is used like this:

-```c

-reply = redisCommand(context, "SET foo bar");

-```

-

-The specifier `%s` interpolates a string in the command, and uses `strlen` to

-determine the length of the string:

-```c

-reply = redisCommand(context, "SET foo %s", value);

-```

-When you need to pass binary safe strings in a command, the `%b` specifier can be

-used. Together with a pointer to the string, it requires a `size_t` length argument

-of the string:

-```c

-reply = redisCommand(context, "SET foo %b", value, (size_t) valuelen);

-```

-Internally, Hiredis splits the command in different arguments and will

-convert it to the protocol used to communicate with Redis.

-One or more spaces separates arguments, so you can use the specifiers

-anywhere in an argument:

-```c

-reply = redisCommand(context, "SET key:%s %s", myid, value);

-```

-

-### Using replies

-

-The return value of `redisCommand` holds a reply when the command was

-successfully executed. When an error occurs, the return value is `NULL` and

-the `err` field in the context will be set (see section on **Errors**).

-Once an error is returned the context cannot be reused and you should set up

-a new connection.

-

-The standard replies that `redisCommand` are of the type `redisReply`. The

-`type` field in the `redisReply` should be used to test what kind of reply

-was received:

-

-* **`REDIS_REPLY_STATUS`**:

- * The command replied with a status reply. The status string can be accessed using `reply->str`.

- The length of this string can be accessed using `reply->len`.

-

-* **`REDIS_REPLY_ERROR`**:

- * The command replied with an error. The error string can be accessed identical to `REDIS_REPLY_STATUS`.

-

-* **`REDIS_REPLY_INTEGER`**:

- * The command replied with an integer. The integer value can be accessed using the

- `reply->integer` field of type `long long`.

-

-* **`REDIS_REPLY_NIL`**:

- * The command replied with a **nil** object. There is no data to access.

-

-* **`REDIS_REPLY_STRING`**:

- * A bulk (string) reply. The value of the reply can be accessed using `reply->str`.

- The length of this string can be accessed using `reply->len`.

-

-* **`REDIS_REPLY_ARRAY`**:

- * A multi bulk reply. The number of elements in the multi bulk reply is stored in

- `reply->elements`. Every element in the multi bulk reply is a `redisReply` object as well

- and can be accessed via `reply->element[..index..]`.

- Redis may reply with nested arrays but this is fully supported.

-

-Replies should be freed using the `freeReplyObject()` function.

-Note that this function will take care of freeing sub-reply objects

-contained in arrays and nested arrays, so there is no need for the user to

-free the sub replies (it is actually harmful and will corrupt the memory).

-

-**Important:** the current version of hiredis (0.10.0) frees replies when the

-asynchronous API is used. This means you should not call `freeReplyObject` when

-you use this API. The reply is cleaned up by hiredis _after_ the callback

-returns. This behavior will probably change in future releases, so make sure to

-keep an eye on the changelog when upgrading (see issue #39).

-

-### Cleaning up

-

-To disconnect and free the context the following function can be used:

-```c

-void redisFree(redisContext *c);

-```

-This function immediately closes the socket and then frees the allocations done in

-creating the context.

-

-### Sending commands (cont'd)

-

-Together with `redisCommand`, the function `redisCommandArgv` can be used to issue commands.

-It has the following prototype:

-```c

-void *redisCommandArgv(redisContext *c, int argc, const char **argv, const size_t *argvlen);

-```

-It takes the number of arguments `argc`, an array of strings `argv` and the lengths of the

-arguments `argvlen`. For convenience, `argvlen` may be set to `NULL` and the function will

-use `strlen(3)` on every argument to determine its length. Obviously, when any of the arguments

-need to be binary safe, the entire array of lengths `argvlen` should be provided.

-

-The return value has the same semantic as `redisCommand`.

-

-### Pipelining

-

-To explain how Hiredis supports pipelining in a blocking connection, there needs to be

-understanding of the internal execution flow.

-

-When any of the functions in the `redisCommand` family is called, Hiredis first formats the

-command according to the Redis protocol. The formatted command is then put in the output buffer

-of the context. This output buffer is dynamic, so it can hold any number of commands.

-After the command is put in the output buffer, `redisGetReply` is called. This function has the

-following two execution paths:

-

-1. The input buffer is non-empty:

- * Try to parse a single reply from the input buffer and return it

- * If no reply could be parsed, continue at *2*

-2. The input buffer is empty:

- * Write the **entire** output buffer to the socket

- * Read from the socket until a single reply could be parsed

-

-The function `redisGetReply` is exported as part of the Hiredis API and can be used when a reply

-is expected on the socket. To pipeline commands, the only things that needs to be done is

-filling up the output buffer. For this cause, two commands can be used that are identical

-to the `redisCommand` family, apart from not returning a reply:

-```c

-void redisAppendCommand(redisContext *c, const char *format, ...);

-void redisAppendCommandArgv(redisContext *c, int argc, const char **argv, const size_t *argvlen);

-```

-After calling either function one or more times, `redisGetReply` can be used to receive the

-subsequent replies. The return value for this function is either `REDIS_OK` or `REDIS_ERR`, where

-the latter means an error occurred while reading a reply. Just as with the other commands,

-the `err` field in the context can be used to find out what the cause of this error is.

-

-The following examples shows a simple pipeline (resulting in only a single call to `write(2)` and

-a single call to `read(2)`):

-```c

-redisReply *reply;

-redisAppendCommand(context,"SET foo bar");

-redisAppendCommand(context,"GET foo");

-redisGetReply(context,&reply); // reply for SET

-freeReplyObject(reply);

-redisGetReply(context,&reply); // reply for GET

-freeReplyObject(reply);

-```

-This API can also be used to implement a blocking subscriber:

-```c

-reply = redisCommand(context,"SUBSCRIBE foo");

-freeReplyObject(reply);

-while(redisGetReply(context,&reply) == REDIS_OK) {

- // consume message

- freeReplyObject(reply);

-}

-```

-### Errors

-

-When a function call is not successful, depending on the function either `NULL` or `REDIS_ERR` is

-returned. The `err` field inside the context will be non-zero and set to one of the

-following constants:

-

-* **`REDIS_ERR_IO`**:

- There was an I/O error while creating the connection, trying to write

- to the socket or read from the socket. If you included `errno.h` in your

- application, you can use the global `errno` variable to find out what is

- wrong.

-

-* **`REDIS_ERR_EOF`**:

- The server closed the connection which resulted in an empty read.

-

-* **`REDIS_ERR_PROTOCOL`**:

- There was an error while parsing the protocol.

-

-* **`REDIS_ERR_OTHER`**:

- Any other error. Currently, it is only used when a specified hostname to connect

- to cannot be resolved.

-

-In every case, the `errstr` field in the context will be set to hold a string representation

-of the error.

-

-## Asynchronous API

-

-Hiredis comes with an asynchronous API that works easily with any event library.

-Examples are bundled that show using Hiredis with [libev](http://software.schmorp.de/pkg/libev.html)

-and [libevent](http://monkey.org/~provos/libevent/).

-

-### Connecting

-

-The function `redisAsyncConnect` can be used to establish a non-blocking connection to

-Redis. It returns a pointer to the newly created `redisAsyncContext` struct. The `err` field

-should be checked after creation to see if there were errors creating the connection.

-Because the connection that will be created is non-blocking, the kernel is not able to

-instantly return if the specified host and port is able to accept a connection.

-```c

-redisAsyncContext *c = redisAsyncConnect("127.0.0.1", 6379);

-if (c->err) {

- printf("Error: %s\n", c->errstr);

- // handle error

-}

-```

-

-The asynchronous context can hold a disconnect callback function that is called when the

-connection is disconnected (either because of an error or per user request). This function should

-have the following prototype:

-```c

-void(const redisAsyncContext *c, int status);

-```

-On a disconnect, the `status` argument is set to `REDIS_OK` when disconnection was initiated by the

-user, or `REDIS_ERR` when the disconnection was caused by an error. When it is `REDIS_ERR`, the `err`

-field in the context can be accessed to find out the cause of the error.

-

-The context object is always freed after the disconnect callback fired. When a reconnect is needed,

-the disconnect callback is a good point to do so.

-

-Setting the disconnect callback can only be done once per context. For subsequent calls it will

-return `REDIS_ERR`. The function to set the disconnect callback has the following prototype:

-```c

-int redisAsyncSetDisconnectCallback(redisAsyncContext *ac, redisDisconnectCallback *fn);

-```

-### Sending commands and their callbacks

-

-In an asynchronous context, commands are automatically pipelined due to the nature of an event loop.

-Therefore, unlike the synchronous API, there is only a single way to send commands.

-Because commands are sent to Redis asynchronously, issuing a command requires a callback function

-that is called when the reply is received. Reply callbacks should have the following prototype:

-```c

-void(redisAsyncContext *c, void *reply, void *privdata);

-```

-The `privdata` argument can be used to curry arbitrary data to the callback from the point where

-the command is initially queued for execution.

-

-The functions that can be used to issue commands in an asynchronous context are:

-```c

-int redisAsyncCommand(

- redisAsyncContext *ac, redisCallbackFn *fn, void *privdata,

- const char *format, ...);

-int redisAsyncCommandArgv(

- redisAsyncContext *ac, redisCallbackFn *fn, void *privdata,

- int argc, const char **argv, const size_t *argvlen);

-```

-Both functions work like their blocking counterparts. The return value is `REDIS_OK` when the command

-was successfully added to the output buffer and `REDIS_ERR` otherwise. Example: when the connection

-is being disconnected per user-request, no new commands may be added to the output buffer and `REDIS_ERR` is

-returned on calls to the `redisAsyncCommand` family.

-

-If the reply for a command with a `NULL` callback is read, it is immediately freed. When the callback

-for a command is non-`NULL`, the memory is freed immediately following the callback: the reply is only

-valid for the duration of the callback.

-

-All pending callbacks are called with a `NULL` reply when the context encountered an error.

-

-### Disconnecting

-

-An asynchronous connection can be terminated using:

-```c

-void redisAsyncDisconnect(redisAsyncContext *ac);

-```

-When this function is called, the connection is **not** immediately terminated. Instead, new

-commands are no longer accepted and the connection is only terminated when all pending commands

-have been written to the socket, their respective replies have been read and their respective

-callbacks have been executed. After this, the disconnection callback is executed with the

-`REDIS_OK` status and the context object is freed.

-

-### Hooking it up to event library *X*

-

-There are a few hooks that need to be set on the context object after it is created.

-See the `adapters/` directory for bindings to *libev* and *libevent*.

-

-## Reply parsing API

-

-Hiredis comes with a reply parsing API that makes it easy for writing higher

-level language bindings.

-

-The reply parsing API consists of the following functions:

-```c

-redisReader *redisReaderCreate(void);

-void redisReaderFree(redisReader *reader);

-int redisReaderFeed(redisReader *reader, const char *buf, size_t len);

-int redisReaderGetReply(redisReader *reader, void **reply);

-```

-The same set of functions are used internally by hiredis when creating a

-normal Redis context, the above API just exposes it to the user for a direct

-usage.

-

-### Usage

-

-The function `redisReaderCreate` creates a `redisReader` structure that holds a

-buffer with unparsed data and state for the protocol parser.

-

-Incoming data -- most likely from a socket -- can be placed in the internal

-buffer of the `redisReader` using `redisReaderFeed`. This function will make a

-copy of the buffer pointed to by `buf` for `len` bytes. This data is parsed

-when `redisReaderGetReply` is called. This function returns an integer status

-and a reply object (as described above) via `void **reply`. The returned status

-can be either `REDIS_OK` or `REDIS_ERR`, where the latter means something went

-wrong (either a protocol error, or an out of memory error).

-

-The parser limits the level of nesting for multi bulk payloads to 7. If the

-multi bulk nesting level is higher than this, the parser returns an error.

-

-### Customizing replies

-

-The function `redisReaderGetReply` creates `redisReply` and makes the function

-argument `reply` point to the created `redisReply` variable. For instance, if

-the response of type `REDIS_REPLY_STATUS` then the `str` field of `redisReply`

-will hold the status as a vanilla C string. However, the functions that are

-responsible for creating instances of the `redisReply` can be customized by

-setting the `fn` field on the `redisReader` struct. This should be done

-immediately after creating the `redisReader`.

-

-For example, [hiredis-rb](https://github.com/pietern/hiredis-rb/blob/master/ext/hiredis_ext/reader.c)

-uses customized reply object functions to create Ruby objects.

-

-### Reader max buffer

-

-Both when using the Reader API directly or when using it indirectly via a

-normal Redis context, the redisReader structure uses a buffer in order to

-accumulate data from the server.

-Usually this buffer is destroyed when it is empty and is larger than 16

-KiB in order to avoid wasting memory in unused buffers

-

-However when working with very big payloads destroying the buffer may slow

-down performances considerably, so it is possible to modify the max size of

-an idle buffer changing the value of the `maxbuf` field of the reader structure

-to the desired value. The special value of 0 means that there is no maximum

-value for an idle buffer, so the buffer will never get freed.

-

-For instance if you have a normal Redis context you can set the maximum idle

-buffer to zero (unlimited) just with:

-```c

-context->reader->maxbuf = 0;

-```

-This should be done only in order to maximize performances when working with

-large payloads. The context should be set back to `REDIS_READER_MAX_BUF` again

-as soon as possible in order to prevent allocation of useless memory.

-

-## AUTHORS

-

-Hiredis was written by Salvatore Sanfilippo (antirez at gmail) and

-Pieter Noordhuis (pcnoordhuis at gmail) and is released under the BSD license.

-Hiredis is currently maintained by Matt Stancliff (matt at genges dot com) and

-Jan-Erik Rediger (janerik at fnordig dot com)

+++ /dev/null

-# LIBUCL

-

-[](https://circleci.com/gh/vstakhov/libucl)

-[](https://scan.coverity.com/projects/4138)

-[](https://coveralls.io/github/vstakhov/libucl?branch=master)

-

-**Table of Contents** *generated with [DocToc](http://doctoc.herokuapp.com/)*

-

-- [Introduction](#introduction)

-- [Basic structure](#basic-structure)

-- [Improvements to the json notation](#improvements-to-the-json-notation)

- - [General syntax sugar](#general-syntax-sugar)

- - [Automatic arrays creation](#automatic-arrays-creation)

- - [Named keys hierarchy](#named-keys-hierarchy)

- - [Convenient numbers and booleans](#convenient-numbers-and-booleans)

-- [General improvements](#general-improvements)

- - [Comments](#comments)

- - [Macros support](#macros-support)

- - [Variables support](#variables-support)

- - [Multiline strings](#multiline-strings)

- - [Single quoted strings](#single-quoted-strings)

-- [Emitter](#emitter)

-- [Validation](#validation)

-- [Performance](#performance)

-- [Conclusion](#conclusion)

-

-## Introduction

-

-This document describes the main features and principles of the configuration

-language called `UCL` - universal configuration language.

-

-If you are looking for the libucl API documentation you can find it at [this page](doc/api.md).

-

-## Basic structure

-

-UCL is heavily infused by `nginx` configuration as the example of a convenient configuration

-system. However, UCL is fully compatible with `JSON` format and is able to parse json files.

-For example, you can write the same configuration in the following ways:

-

-* in nginx like:

-

-```nginx

-param = value;

-section {

- param = value;

- param1 = value1;

- flag = true;

- number = 10k;

- time = 0.2s;

- string = "something";

- subsection {

- host = {

- host = "hostname";

- port = 900;

- }

- host = {

- host = "hostname";

- port = 901;

- }

- }

-}

-```

-

-* or in JSON:

-

-```json

-{

- "param": "value",

- "param1": "value1",

- "flag": true,

- "subsection": {

- "host": [

- {

- "host": "hostname",

- "port": 900

- },

- {

- "host": "hostname",

- "port": 901

- }

- ]

- }

-}

-```

-

-## Improvements to the json notation.

-

-There are various things that make ucl configuration more convenient for editing than strict json:

-

-### General syntax sugar

-

-* Braces are not necessary to enclose a top object: it is automatically treated as an object:

-

-```json

-"key": "value"

-```

-is equal to:

-```json

-{"key": "value"}

-```

-

-* There is no requirement of quotes for strings and keys, moreover, `:` may be replaced `=` or even be skipped for objects:

-

-```nginx

-key = value;

-section {

- key = value;

-}

-```

-is equal to:

-```json

-{

- "key": "value",

- "section": {

- "key": "value"

- }

-}

-```

-

-* No commas mess: you can safely place a comma or semicolon for the last element in an array or an object:

-

-```json

-{

- "key1": "value",

- "key2": "value",

-}

-```

-### Automatic arrays creation

-

-* Non-unique keys in an object are allowed and are automatically converted to the arrays internally:

-

-```json

-{

- "key": "value1",

- "key": "value2"

-}

-```

-is converted to:

-```json

-{

- "key": ["value1", "value2"]

-}

-```

-

-### Named keys hierarchy

-

-UCL accepts named keys and organize them into objects hierarchy internally. Here is an example of this process:

-```nginx

-section "blah" {

- key = value;

-}

-section foo {

- key = value;

-}

-```

-

-is converted to the following object:

-

-```nginx

-section {

- blah {

- key = value;

- }

- foo {

- key = value;

- }

-}

-```

-

-Plain definitions may be more complex and contain more than a single level of nested objects:

-

-```nginx

-section "blah" "foo" {

- key = value;

-}

-```

-

-is presented as:

-

-```nginx

-section {

- blah {

- foo {

- key = value;

- }

- }

-}

-```

-

-### Convenient numbers and booleans

-

-* Numbers can have suffixes to specify standard multipliers:

- + `[kKmMgG]` - standard 10 base multipliers (so `1k` is translated to 1000)

- + `[kKmMgG]b` - 2 power multipliers (so `1kb` is translated to 1024)

- + `[s|min|d|w|y]` - time multipliers, all time values are translated to float number of seconds, for example `10min` is translated to 600.0 and `10ms` is translated to 0.01

-* Hexadecimal integers can be used by `0x` prefix, for example `key = 0xff`. However, floating point values can use decimal base only.

-* Booleans can be specified as `true` or `yes` or `on` and `false` or `no` or `off`.

-* It is still possible to treat numbers and booleans as strings by enclosing them in double quotes.

-

-## General improvements

-

-### Comments

-

-UCL supports different style of comments:

-

-* single line: `#`

-* multiline: `/* ... */`

-

-Multiline comments may be nested:

-```c

-# Sample single line comment

-/*

- some comment

- /* nested comment */

- end of comment

-*/

-```

-

-### Macros support

-

-UCL supports external macros both multiline and single line ones:

-```nginx

-.macro_name "sometext";

-.macro_name {

- Some long text

- ....

-};

-```

-

-Moreover, each macro can accept an optional list of arguments in braces. These

-arguments themselves are the UCL object that is parsed and passed to a macro as

-options:

-

-```nginx

-.macro_name(param=value) "something";

-.macro_name(param={key=value}) "something";

-.macro_name(.include "params.conf") "something";

-.macro_name(#this is multiline macro

-param = [value1, value2]) "something";

-.macro_name(key="()") "something";

-```

-

-UCL also provide a convenient `include` macro to load content from another files

-to the current UCL object. This macro accepts either path to file:

-

-```nginx

-.include "/full/path.conf"

-.include "./relative/path.conf"

-.include "${CURDIR}/path.conf"

-```

-

-or URL (if ucl is built with url support provided by either `libcurl` or `libfetch`):

-

- .include "http://example.com/file.conf"

-

-`.include` macro supports a set of options:

-

-* `try` (default: **false**) - if this option is `true` than UCL treats errors on loading of

-this file as non-fatal. For example, such a file can be absent but it won't stop the parsing

-of the top-level document.

-* `sign` (default: **false**) - if this option is `true` UCL loads and checks the signature for

-a file from path named `<FILEPATH>.sig`. Trusted public keys should be provided for UCL API after

-parser is created but before any configurations are parsed.

-* `glob` (default: **false**) - if this option is `true` UCL treats the filename as GLOB pattern and load

-all files that matches the specified pattern (normally the format of patterns is defined in `glob` manual page

-for your operating system). This option is meaningless for URL includes.

-* `url` (default: **true**) - allow URL includes.

-* `path` (default: empty) - A UCL_ARRAY of directories to search for the include file.

-Search ends after the first match, unless `glob` is true, then all matches are included.

-* `prefix` (default false) - Put included contents inside an object, instead

-of loading them into the root. If no `key` is provided, one is automatically generated based on each files basename()

-* `key` (default: <empty string>) - Key to load contents of include into. If

-the key already exists, it must be the correct type

-* `target` (default: object) - Specify if the `prefix` `key` should be an

-object or an array.

-* `priority` (default: 0) - specify priority for the include (see below).

-* `duplicate` (default: 'append') - specify policy of duplicates resolving:

- - `append` - default strategy, if we have new object of higher priority then it replaces old one, if we have new object with less priority it is ignored completely, and if we have two duplicate objects with the same priority then we have a multi-value key (implicit array)

- - `merge` - if we have object or array, then new keys are merged inside, if we have a plain object then an implicit array is formed (regardless of priorities)

- - `error` - create error on duplicate keys and stop parsing

- - `rewrite` - always rewrite an old value with new one (ignoring priorities)

-

-Priorities are used by UCL parser to manage the policy of objects rewriting during including other files

-as following:

-

-* If we have two objects with the same priority then we form an implicit array

-* If a new object has bigger priority then we overwrite an old one

-* If a new object has lower priority then we ignore it

-

-By default, the priority of top-level object is set to zero (lowest priority). Currently,

-you can define up to 16 priorities (from 0 to 15). Includes with bigger priorities will

-rewrite keys from the objects with lower priorities as specified by the policy.

-

-### Variables support

-

-UCL supports variables in input. Variables are registered by a user of the UCL parser and can be presented in the following forms:

-

-* `${VARIABLE}`

-* `$VARIABLE`

-

-UCL currently does not support nested variables. To escape variables one could use double dollar signs:

-

-* `$${VARIABLE}` is converted to `${VARIABLE}`

-* `$$VARIABLE` is converted to `$VARIABLE`

-

-However, if no valid variables are found in a string, no expansion will be performed (and `$$` thus remains unchanged). This may be a subject

-to change in future libucl releases.

-

-### Multiline strings

-

-UCL can handle multiline strings as well as single line ones. It uses shell/perl like notation for such objects:

-```

-key = <<EOD

-some text

-splitted to

-lines

-EOD

-```

-

-In this example `key` will be interpreted as the following string: `some text\nsplitted to\nlines`.

-Here are some rules for this syntax:

-

-* Multiline terminator must start just after `<<` symbols and it must consist of capital letters only (e.g. `<<eof` or `<< EOF` won't work);

-* Terminator must end with a single newline character (and no spaces are allowed between terminator and newline character);

-* To finish multiline string you need to include a terminator string just after newline and followed by a newline (no spaces or other characters are allowed as well);

-* The initial and the final newlines are not inserted to the resulting string, but you can still specify newlines at the beginning and at the end of a value, for example:

-

-```

-key <<EOD

-

-some

-text

-

-EOD

-```

-

-### Single quoted strings

-

-It is possible to use single quoted strings to simplify escaping rules. All values passed in single quoted strings are *NOT* escaped, with two exceptions: a single `'` character just before `\` character, and a newline character just after `\` character that is ignored.

-

-```

-key = 'value'; # Read as value

-key = 'value\n\'; # Read as value\n\

-key = 'value\''; # Read as value'

-key = 'value\

-bla'; # Read as valuebla

-```

-

-## Emitter

-

-Each UCL object can be serialized to one of the three supported formats:

-

-* `JSON` - canonic json notation (with spaces indented structure);

-* `Compacted JSON` - compact json notation (without spaces or newlines);

-* `Configuration` - nginx like notation;

-* `YAML` - yaml inlined notation.

-

-## Validation

-

-UCL allows validation of objects. It uses the same schema that is used for json: [json schema v4](http://json-schema.org). UCL supports the full set of json schema with the exception of remote references. This feature is unlikely useful for configuration objects. Of course, a schema definition can be in UCL format instead of JSON that simplifies schemas writing. Moreover, since UCL supports multiple values for keys in an object it is possible to specify generic integer constraints `maxValues` and `minValues` to define the limits of values count in a single key. UCL currently is not absolutely strict about validation schemas themselves, therefore UCL users should supply valid schemas (as it is defined in json-schema draft v4) to ensure that the input objects are validated properly.

-

-## Performance

-

-Are UCL parser and emitter fast enough? Well, there are some numbers.

-I got a 19Mb file that consist of ~700 thousand lines of json (obtained via

-http://www.json-generator.com/). Then I checked jansson library that performs json

-parsing and emitting and compared it with UCL. Here are results:

-

-```

-jansson: parsed json in 1.3899 seconds

-jansson: emitted object in 0.2609 seconds

-

-ucl: parsed input in 0.6649 seconds

-ucl: emitted config in 0.2423 seconds

-ucl: emitted json in 0.2329 seconds

-ucl: emitted compact json in 0.1811 seconds

-ucl: emitted yaml in 0.2489 seconds

-```

-

-So far, UCL seems to be significantly faster than jansson on parsing and slightly faster on emitting. Moreover,

-UCL compiled with optimizations (-O3) performs significantly faster:

-```

-ucl: parsed input in 0.3002 seconds

-ucl: emitted config in 0.1174 seconds

-ucl: emitted json in 0.1174 seconds

-ucl: emitted compact json in 0.0991 seconds

-ucl: emitted yaml in 0.1354 seconds

-```

-

-You can do your own benchmarks by running `make check` in libucl top directory.

-

-## Conclusion

-

-UCL has clear design that should be very convenient for reading and writing. At the same time it is compatible with

-JSON language and therefore can be used as a simple JSON parser. Macro logic provides an ability to extend configuration

-language (for example by including some lua code) and comments allow to disable or enable the parts of a configuration

-quickly.

+++ /dev/null

-# Lupa

-

-## Introduction

-

-Lupa is a [Jinja2][] template engine implementation written in Lua and supports

-Lua syntax within tags and variables.

-

-Lupa was sponsored by the [Library of the University of Antwerp][].

-

-[Jinja2]: http://jinja.pocoo.org

-[Library of the University of Antwerp]: http://www.uantwerpen.be/

-

-## Requirements

-

-Lupa has the following requirements:

-

-* [Lua][] 5.1, 5.2, or 5.3.

-* The [LPeg][] library.

-

-[Lua]: http://www.lua.org

-[LPeg]: http://www.inf.puc-rio.br/~roberto/lpeg/

-

-## Download

-

-Download Lupa from the project’s [download page][].

-

-[download page]: download

-

-## Installation

-

-Unzip Lupa and place the "lupa.lua" file in your Lua installation's

-`package.path`. This location depends on your version of Lua. Typical locations

-are listed below.

-

-* Lua 5.1: */usr/local/share/lua/5.1/* or */usr/local/share/lua/5.1/*

-* Lua 5.2: */usr/local/share/lua/5.2/* or */usr/local/share/lua/5.2/*

-* Lua 5.3: */usr/local/share/lua/5.3/* or */usr/local/share/lua/5.3/*

-

-You can also place the "lupa.lua" file wherever you'd like and add it to Lua's

-`package.path` manually in your program. For example, if Lupa was placed in a

-*/home/user/lua/* directory, it can be used as follows:

-

- package.path = package.path..';/home/user/lua/?.lua'

-

-## Usage

-

-Lupa is simply a Lua library. Its `lupa.expand()` and `lupa.expand_file()`

-functions may called to process templates. For example:

-

- lupa = require('lupa')

- lupa.expand("hello {{ s }}!", {s = "world"}) --> "hello world!"

- lupa.expand("{% for i in {1, 2, 3} %}{{ i }}{% endfor %}") --> 123

-

-By default, Lupa loads templates relative to the current working directory. This

-can be changed by reconfiguring Lupa:

-

- lupa.expand_file('name') --> expands template "./name"

- lupa.configure{loader = lupa.loaders.filesystem('path/to/templates')}

- lupa.expand_file('name') --> expands template "path/to/templates/name"

-

-See Lupa's [API documentation][] for more information.

-

-[API documentation]: api.html

-

-## Syntax

-

-Please refer to Jinja2's extensive [template documentation][]. Any

-incompatibilities are listed in the sections below.

-

-[template documentation]: http://jinja.pocoo.org/docs/dev/templates/

-

-## Comparison with Jinja2

-

-While Lua and Python (Jinja2's implementation language) share some similarities,

-the languages themselves are fundamentally different. Nevertheless, a

-significant effort was made to support a vast majority of Jinja2's Python-style

-syntax. As a result, Lupa passes Jinja2's test suite with only a handful of

-modifications. The comprehensive list of differences between Lupa and Jinja2 is

-described in the following sections.

-

-### Fundamental Differences

-

-* Expressions use Lua's syntax instead of Python's, so many of Python's

- syntactic constructs are not valid. However, the following constructs

- *are valid*, despite being invalid in pure Lua:

-

- + Iterating over table literals or table variables directly in a "for" loop:

-

- {% for i in {1, 2, 3} %}...{% endfor %}

-

- + Conditional loops via an "if" expression suffix:

-

- {% for x in range(10) if is_odd(x) %}...{% endfor %}

-

- + Table unpacking for list elements when iterating through a list of lists:

-

- {% for a, b, c in {{1, 2, 3}, {4, 5, 6}} %}...{% endfor %}

-

- + Default values for macro arguments:

-

- {% macro m(a, b, c='c', d='d') %}...{% endmacro %}

-

-* Strings do not have unicode escapes nor is unicode interpreted in any way.

-

-### Syntactic Differences

-

-* Line statements are not supported due to parsing complexity.

-* In `{% for ... %}` loops, the `loop.length`, `loop.revindex`,

- `loop.revindex0`, and `loop.last` variables only apply to sequences, where

- Lua's `'#'` operator applies.